Investing time and effort in a 360-degree feedback program should lead to valuable insights, but many organizations encounter inconsistencies, contradictions, or a lack of actionable data. If your 360-degree feedback process doesn’t deliver meaningful improvements, it can frustrate participants and diminish trust in your feedback system.

A well-executed 360-degree feedback process produces data that is clear, relevant, and constructive. By focusing on five essential criteria (a well-designed questionnaire, structured rater selection, psychometric validation, confidentiality, and actionable follow-up), you can make your 360-degree feedback process a tool for leadership development and employee growth.

Why 360-Degree Feedback Is Important

Unlike traditional performance appraisals that rely solely on manager evaluations, 360-degree feedback provides a broader perspective. Incorporating feedback from multiple sources, such as direct reports, peers, and supervisors, enhances self-awareness, identifies strengths and areas for improvement, and aligns personal growth with organizational objectives. When organizations use 360-degree feedback as a development tool rather than solely for performance management, they foster a culture of feedback that supports continuous employee development.

Key Criteria for Valid and Reliable 360-Degree Feedback

1. A Well-Designed 360-Degree Feedback Questionnaire

A robust feedback questionnaire is at the core of any successful 360 review process. It must align with the organization’s strategic goals and competencies, ensuring that the 360 survey remains relevant and actionable.

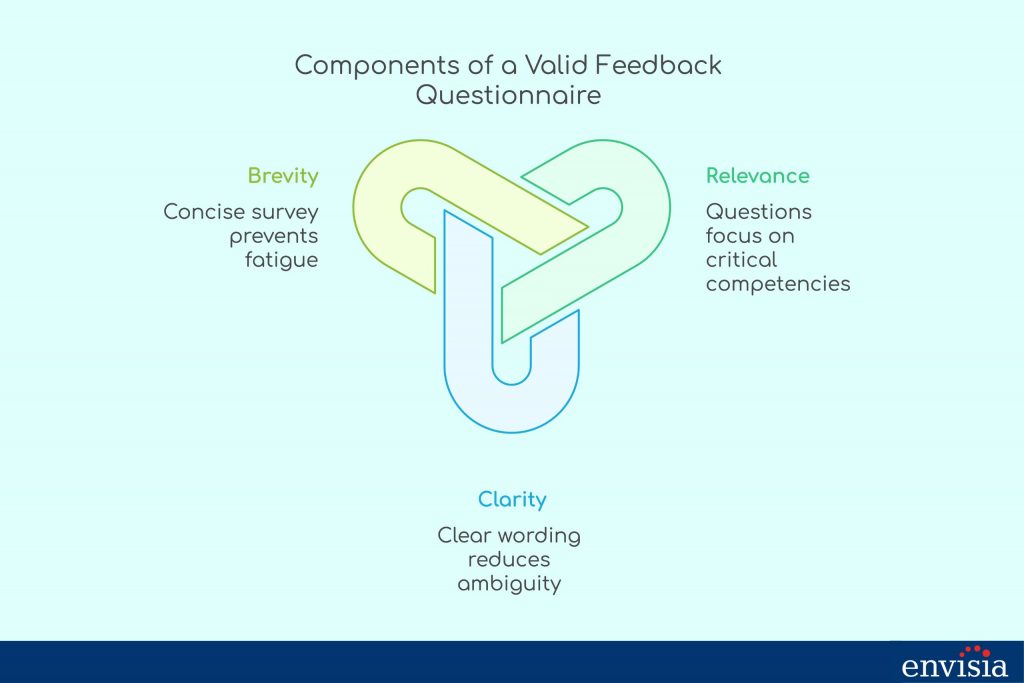

A valid questionnaire meets three criteria:

- Relevance – Each question should focus on observable behaviors tied to competencies critical to the role.

- Clarity – Clear wording reduces ambiguity, ensuring raters provide actionable feedback.

- Brevity – Surveys should be concise (30-40 questions) to prevent fatigue and improve response rates.

At Envisia Learning, our 360-degree feedback assessments are designed to capture precise feedback results while maintaining accessibility for all, including feedback recipients.

2. Selecting and Training the Right Raters

The validity of the process depends on selecting the right raters and training them to provide feedback that is constructive and unbiased. To ensure balanced insights, include feedback providers from different perspectives—supervisors, peers, and direct reports—who have worked with the feedback recipient for at least six months.

Raters must understand:

- How to deliver effective feedback focused on behaviors rather than personal traits.

- The importance of using a consistent rating scale.

- The necessity of open feedback while maintaining confidentiality.

Organizations must address these crucial areas to ensure raters feel comfortable when asked to provide feedback, making the 360-degree feedback process more reliable.

3. Ensuring Validity Through Psychometric Testing

To confirm that a 360 process is valid, organizations should apply psychometric principles to evaluate reliability and validity:

- Test-Retest Reliability – Ensures feedback data remains consistent over time when behaviors have not changed.

- Internal Consistency – Uses metrics like Cronbach’s alpha to confirm that items measuring the same competency are correlated.

- Criterion Validity – Determines whether the tool is valid by assessing its correlation with real-world performance metrics.
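
As a rough illustration of these checks, the sketch below computes Cronbach’s alpha for one competency and a Pearson correlation that could serve for either test-retest reliability or criterion validity. The function names and the sample ratings are hypothetical and chosen only for illustration; this is not a description of any particular vendor’s scoring method.

```python
from math import sqrt
from statistics import mean, variance


def cronbach_alpha(ratings):
    """Internal consistency for one competency.

    ratings: list of rows, one row per rater, one score per item.
    """
    k = len(ratings[0])  # number of items in the competency
    if k < 2 or len(ratings) < 2:
        raise ValueError("need at least two items and two raters")
    # Sample variance of each item (column) across raters.
    item_vars = [variance(col) for col in zip(*ratings)]
    # Sample variance of each rater's total score.
    total_var = variance([sum(row) for row in ratings])
    if total_var == 0:
        raise ValueError("total scores do not vary; alpha is undefined")
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


def pearson_r(x, y):
    """Pearson correlation between two equally long score lists."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


# Hypothetical data: five raters scoring three items on a 1-5 scale.
communication_items = [
    [4, 4, 5],
    [3, 3, 4],
    [5, 4, 5],
    [2, 3, 2],
    [4, 5, 4],
]
alpha = cronbach_alpha(communication_items)

# Hypothetical competency scores for the same people at two time points.
first_round = [3.8, 4.2, 2.9, 4.6, 3.5]
second_round = [3.9, 4.0, 3.1, 4.5, 3.4]
retest_r = pearson_r(first_round, second_round)

print(f"Cronbach's alpha: {alpha:.2f}")
print(f"Test-retest correlation: {retest_r:.2f}")
```

The same `pearson_r` helper can be applied to criterion validity by correlating competency scores with an external performance metric. Thresholds for what counts as acceptable vary by context, so treat any cutoff as a convention rather than a rule.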

4. Confidentiality and Anonymity for Honest Feedback

For 360-degree feedback to be effective, raters must feel safe when providing honest responses. Anonymity in the feedback process fosters trust, encouraging open feedback from multiple sources. Organizations should:

- Use secure feedback platforms that protect participant identities.

- Clearly communicate how feedback data will be used.

- Establish a culture of feedback in which employees trust the system, which can have a positive impact on employee engagement.

When feedback is effective and anonymous, individuals can confidently receive feedback and apply it to their development plan.

5. Actionable Reporting and Follow-Up

A 360 review system is only as valuable as the follow-up process. Reports should translate feedback data into actionable insights, highlighting strengths, growth areas, and next steps.

Best practices for follow-up include:

- Offering structured coaching or mentoring programs.

- Providing clear development plans with measurable milestones.

- Ensuring that feedback is integrated into ongoing performance discussions.

Envisia Learning’s Talent Accelerator platform helps participants develop their skills through guided coaching, making the 360-degree feedback process more impactful.

Advantages and Disadvantages of 360-Degree Feedback

Advantages

Successful 360 programs provide a holistic view of performance, foster self-awareness, and support leadership development. Benefits include:

- Highlighting blind spots that might otherwise go unnoticed.

- Encouraging constructive feedback from multiple perspectives.

- Aligning individual competencies with organizational objectives.

Disadvantages

Despite its benefits, there are challenges to consider. Some raters may introduce biases, and without proper follow-up, participants may not take the results seriously. Additionally, implementing a 360 feedback program requires time and resources.

At Envisia Learning, we mitigate these drawbacks by incorporating psychometrically validated tools and expert guidance to enhance feedback accuracy and effectiveness.

Best Practices for Implementing a Successful 360-Degree Feedback Program

- Use a Strengths-Based Approach – Focusing on strengths alongside development areas increases engagement and motivation.

- Invest in the Right 360-Degree Feedback Software – Quality software enhances data visualization and reporting.

- Align Feedback with Organizational Goals – Ensuring feedback items reflect key organizational competencies makes the process more relevant.

- Provide Coaching and Support Post-Feedback – Structured coaching helps feedback recipients integrate insights into daily performance.

The Future of 360-Degree Feedback

Advancements in AI, neuroscience, and behavioral analytics are shaping the future of multi-rater feedback systems. Organizations must continuously refine their approach to maintain valid and reliable assessments that drive employee growth and development.

Conclusion

A successful feedback program hinges on a tool that is valid and reliable, a well-structured feedback questionnaire, and actionable follow-up strategies. By implementing best practices and leveraging expert support, organizations can maximize the impact of their 360-degree feedback process.

Envisia Learning provides scientifically validated assessments and expert guidance to help organizations optimize their 360-degree feedback initiatives. Ready to elevate your 360-degree feedback program? Reach out to our team to explore how Envisia Learning can support your 360-degree feedback process.